MCP-Powered AI Chatbot Applications

How to build a production-ready AI chatbot that seamlessly integrates real-time stock data using the Model Context Protocol

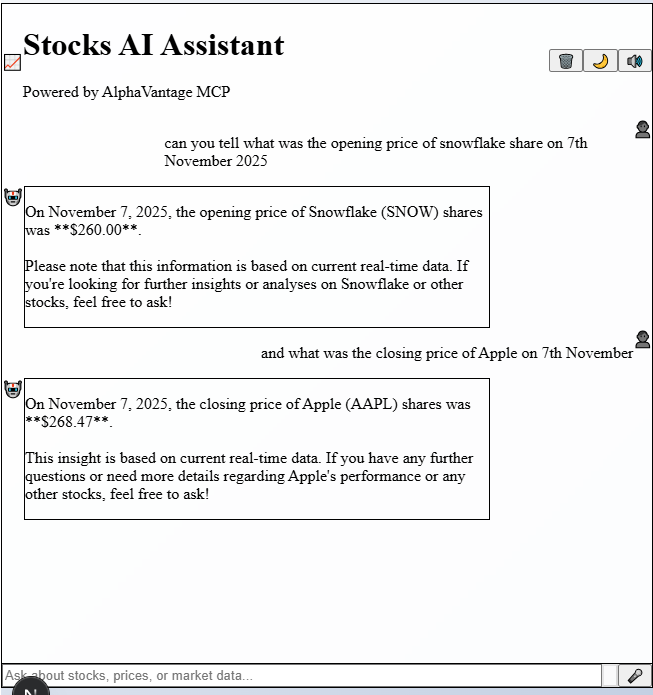

Stocks AI Chatbot

The Challenge: Beyond Simple Chatbots

In the rapidly evolving landscape of AI applications, the gap between basic chatbots and truly intelligent, context-aware agents has never been more apparent. While most financial chatbots rely on static data or simple API calls, I set out to build something fundamentally different: an agentic AI system that could dynamically discover, understand, and use external tools in real time.

The result? A sophisticated stock market chatbot that doesn't just answer questions—it actively reasons about financial data, maintains contextual conversations, and adapts its capabilities on the fly through the Model Context Protocol (MCP).

The Architecture: Where MCP Meets Modern AI

What Makes This Different

Traditional chatbot architectures follow a rigid pattern: user input → predefined API call → formatted response. This approach breaks down when you need:

- Dynamic tool discovery - What if new data sources become available?

- Contextual reasoning - How do you maintain conversation state across complex queries?

- Real-time adaptability - Can your system learn new capabilities without redeployment?

Traditional vs MCP Architecture

```mermaid
graph TD
    subgraph "Traditional Architecture"
        A1[User Input] --> B1[Static API Call]
        B1 --> C1[Fixed Response]
        C1 --> D1[User Output]
    end

    subgraph "MCP-Powered Architecture"
        A2[User Input] --> B2[Dynamic Tool Discovery]
        B2 --> C2[AI Reasoning]
        C2 --> D2[Tool Selection]
        D2 --> E2[MCP Tool Execution]
        E2 --> F2[Contextual Response]
        F2 --> G2[User Output]

        H2[MCP Server] --> B2
        H2 --> E2
    end
```

My solution leverages the Model Context Protocol to create a truly agentic system that addresses all these challenges.

The MCP Advantage

```typescript
// Traditional approach - rigid and limited
const getStockPrice = async (symbol: string) => {
  const response = await fetch(`/api/stocks/${symbol}`);
  return response.json();
};

// MCP approach - dynamic and extensible
const mcpClient = new MCPClient();
const availableTools = await mcpClient.listTools();
const result = await mcpClient.callTool('get_stock_quote', { symbol: 'AAPL' });
```

The difference is profound. With MCP, my application:

- Discovers tools dynamically from the AlphaVantage MCP server

- Converts MCP tools to OpenAI function calling format automatically

- Executes tool calls via standardized JSON-RPC 2.0 protocol

- Maintains context across multiple tool interactions
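To make the JSON-RPC 2.0 step concrete, here is a minimal sketch of the request envelopes a client might send for the `tools/list` and `tools/call` methods. The helper `buildRequest` and the example `symbol` argument are illustrative assumptions, not code from this project; the method names and the `name`/`arguments` parameters follow the MCP specification.

```typescript
// Minimal JSON-RPC 2.0 request envelope, as used by MCP.
interface JsonRpcRequest {
  jsonrpc: "2.0";
  id: number;
  method: string;
  params?: Record<string, unknown>;
}

let nextRequestId = 1;

// Hypothetical helper: wraps a method and optional params in an envelope
// with a fresh id so that responses can be matched to requests.
function buildRequest(
  method: string,
  params?: Record<string, unknown>
): JsonRpcRequest {
  const request: JsonRpcRequest = { jsonrpc: "2.0", id: nextRequestId++, method };
  if (params !== undefined) {
    request.params = params;
  }
  return request;
}

// Ask the server which tools it exposes.
const listRequest = buildRequest("tools/list");

// Invoke one tool by name, passing its arguments as an object.
const callRequest = buildRequest("tools/call", {
  name: "get_stock_quote",
  arguments: { symbol: "AAPL" },
});

console.log(JSON.stringify(listRequest));
console.log(JSON.stringify(callRequest, null, 2));
```

The incrementing `id` is what lets a client match each response to its request when several calls are in flight at once.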

Technical Deep Dive: The MCP Integration Layer

Core MCP Client Implementation

The heart of the system is a custom MCP client that bridges the gap between OpenAI's function calling and the Model Context Protocol:

```typescript
class MCPClient {
  // sendRequest (not shown) is assumed to return the JSON-RPC `result` object.
  // Per the MCP specification, the tools/list result wraps the array in a `tools` field.
  private async discoverTools() {
    const { tools } = await this.sendRequest('tools/list');
    return tools.map((tool: MCPTool) => this.convertToOpenAIFormat(tool));
  }

  // Map an MCP tool definition onto OpenAI's function-calling schema
  private convertToOpenAIFormat(mcpTool: MCPTool): OpenAIFunction {
    return {
      name: mcpTool.name,
      description: mcpTool.description,
      parameters: mcpTool.inputSchema
    };
  }

  // Forward a tool invocation to the MCP server
  async executeToolCall(name: string, args: any) {
    return await this.sendRequest('tools/call', {
      name,
      arguments: args
    });
  }
}
```
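The conversion above produces the flat shape expected by the older `functions` parameter. The newer `tools` parameter of the Chat Completions API wraps each function in an object with `type: "function"`. The following is a sketch of that variant; the type names `McpToolDef` and `OpenAIToolDef` and the example schema are simplified assumptions rather than the project's actual types.

```typescript
// Simplified shape of a tool entry returned by an MCP server's tools/list.
interface McpToolDef {
  name: string;
  description?: string;
  inputSchema: Record<string, unknown>; // JSON Schema for the arguments
}

// Simplified shape of an entry in the Chat Completions `tools` array.
interface OpenAIToolDef {
  type: "function";
  function: {
    name: string;
    description: string;
    parameters: Record<string, unknown>;
  };
}

function toOpenAITool(tool: McpToolDef): OpenAIToolDef {
  return {
    type: "function",
    function: {
      name: tool.name,
      // MCP descriptions are optional, so fall back to an empty string.
      description: tool.description ?? "",
      parameters: tool.inputSchema,
    },
  };
}

// Example input modelled on the quote tool used in this article;
// the schema itself is illustrative.
const quoteTool: McpToolDef = {
  name: "get_stock_quote",
  description: "Fetch the latest quote for a ticker symbol",
  inputSchema: {
    type: "object",
    properties: { symbol: { type: "string" } },
    required: ["symbol"],
  },
};

const openAiTool = toOpenAITool(quoteTool);
console.log(JSON.stringify(openAiTool, null, 2));
```

Because MCP's `inputSchema` is already JSON Schema, it can be passed through as `parameters` unchanged.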

MCP Integration Flow

```mermaid
sequenceDiagram
    participant U as User
    participant F as Frontend
    participant A as API Route
    participant M as MCP Client
    participant S as MCP Server
    participant O as OpenAI GPT-4

    U->>F: "What's AAPL price?"
    F->>A: POST /api/chat
    A->>M: listTools()
    M->>S: tools/list (JSON-RPC)
    S-->>M: Available tools
    M-->>A: Converted OpenAI functions
    A->>O: Chat completion with tools
    O-->>A: Function call decision
    A->>M: executeToolCall()
    M->>S: tools/call (JSON-RPC)
    S-->>M: Stock data
    M-->>A: Tool result
    A->>O: Generate response with data
    O-->>A: Natural language response
    A-->>F: Formatted response
    F-->>U: "AAPL is trading at $185.42..."
```

This abstraction layer enables seamless integration between:

- OpenAI GPT-4 for natural language understanding and generation

- AlphaVantage MCP Server for real-time financial data

- Custom business logic for contextual reasoning

Agentic Conversation Flow

```mermaid
flowchart TD
    A[User Message] --> B{New Conversation?}
    B -->|Yes| C[Initialize Context]
    B -->|No| D[Load History]

    C --> E[Discover MCP Tools]
    D --> E

    E --> F[Send to GPT-4 with Tools]
    F --> G{Function Call Needed?}

    G -->|No| H[Direct Response]
    G -->|Yes| I[Execute MCP Tool]

    I --> J[Get Real-time Data]
    J --> K[Generate Contextual Response]

    H --> L[Update Conversation History]
    K --> L

    L --> M[Persist to LocalStorage]
    M --> N[Return to User]

    N --> O{Continue Conversation?}
    O -->|Yes| D
    O -->|No| P[End]
```

The system implements a sophisticated conversation management pattern:

```typescript
const conversationFlow = async (userMessage: string, history: Message[]) => {
  // 1. Discover available tools dynamically
  const tools = await mcpClient.listTools();

  // 2. Let the model reason about tool usage
  // (`functions` / `function_call` are the legacy parameters; newer code uses `tools` / `tool_choice`)
  const response = await openai.chat.completions.create({
    model: 'gpt-4o-mini',
    messages: [...history, { role: 'user', content: userMessage }],
    functions: tools,
    function_call: 'auto'
  });

  // The function call, if any, lives on the first choice's message
  const message = response.choices[0].message;

  // 3. Execute tool calls if needed
  if (message.function_call) {
    const toolResult = await mcpClient.executeToolCall(
      message.function_call.name,
      JSON.parse(message.function_call.arguments)
    );

    // 4. Generate contextual response with tool data
    return await generateContextualResponse(toolResult, history);
  }

  return message.content;
};
```
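The step that hands tool data back to the model is hidden inside `generateContextualResponse`. As a rough sketch of what that hand-off can look like with the newer `tools` message format, the tool result is appended as a `tool` message that references the id of the call it answers. The types below are simplified, and `appendToolResult` and the `call_1` id are hypothetical names used only for illustration.

```typescript
interface ToolCall {
  id: string;
  type: "function";
  function: { name: string; arguments: string }; // arguments is a JSON string
}

type ChatMessage =
  | { role: "system" | "user"; content: string }
  | { role: "assistant"; content: string | null; tool_calls?: ToolCall[] }
  | { role: "tool"; tool_call_id: string; content: string };

// Returns a new message list with the assistant's tool request and the
// tool's result appended; the input array is left untouched.
function appendToolResult(
  messages: ChatMessage[],
  call: ToolCall,
  result: unknown
): ChatMessage[] {
  return [
    ...messages,
    { role: "assistant", content: null, tool_calls: [call] },
    { role: "tool", tool_call_id: call.id, content: JSON.stringify(result) },
  ];
}

const historySoFar: ChatMessage[] = [
  { role: "user", content: "What's AAPL price?" },
];

const pendingCall: ToolCall = {
  id: "call_1", // placeholder; real ids come from the model's response
  type: "function",
  function: { name: "get_stock_quote", arguments: '{"symbol":"AAPL"}' },
};

const followUp = appendToolResult(historySoFar, pendingCall, { price: 185.42 });
console.log(followUp.map((m) => m.role).join(" -> "));
```

Sending `followUp` in a second completion request lets the model phrase the answer using the returned data.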

Advanced Features: Beyond Basic Q&A

Contextual Intelligence

```mermaid
graph LR
    subgraph "Context Management"
        A[Message 1: AAPL?] --> B[Entity: AAPL]
        C[Message 2: Microsoft?] --> D[Entity: MSFT]
        E[Message 3: Volume for both?] --> F[Entities: AAPL + MSFT]

        B --> G[Context Store]
        D --> G
        F --> G

        G --> H[Smart Query Resolution]
    end
```

The system maintains sophisticated conversation context, enabling natural follow-up queries:

User: "What's Apple's current stock price?"

AI: "Apple (AAPL) is currently trading at $185.42..."

User: "How does that compare to Microsoft?"

AI: "Microsoft (MSFT) is at $378.85, which means it's trading at roughly 2x Apple's price..."

User: "What about the volume for both?"

AI: "Looking at today's volume: AAPL has 45.2M shares traded while MSFT shows 28.7M..."

This contextual awareness is achieved through:

- Conversation history management with sliding window context

- Entity extraction to track mentioned stocks across messages

- Semantic understanding of comparative and relational queries
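Entity tracking of this kind can be approximated with very little code. The sketch below is not the project's implementation: it assumes a tiny hypothetical lookup table (`COMPANY_TICKERS`) and resolves a message that names no company, such as "both", to the tickers seen so far.

```typescript
// Hypothetical lookup table; a real system would need a far larger mapping
// or a symbol-search tool.
const COMPANY_TICKERS = new Map<string, string>([
  ["apple", "AAPL"],
  ["microsoft", "MSFT"],
]);
const KNOWN_TICKERS = new Set(Array.from(COMPANY_TICKERS.values()));

// Returns the ticker symbols mentioned in a single message.
function extractTickers(message: string): string[] {
  const found = new Set<string>();
  for (const word of message.split(/[^A-Za-z]+/)) {
    const byName = COMPANY_TICKERS.get(word.toLowerCase());
    if (byName !== undefined) {
      found.add(byName);
    } else if (KNOWN_TICKERS.has(word)) {
      found.add(word); // already an upper-case ticker such as "MSFT"
    }
  }
  return Array.from(found);
}

// Tracks tickers across turns; a message that names none inherits
// every ticker mentioned earlier in the conversation.
function resolveEntities(messages: string[]): string[][] {
  const seen: string[] = [];
  return messages.map((message) => {
    const mentioned = extractTickers(message);
    for (const ticker of mentioned) {
      if (!seen.includes(ticker)) seen.push(ticker);
    }
    return mentioned.length > 0 ? mentioned : [...seen];
  });
}

const resolved = resolveEntities([
  "What's Apple's current stock price?",
  "How does that compare to Microsoft?",
  "What about the volume for both?",
]);
console.log(JSON.stringify(resolved));
// prints [["AAPL"],["MSFT"],["AAPL","MSFT"]]
```

In practice the language model does much of this resolution itself when given the conversation history; an explicit tracker mainly helps with logging and with trimming history safely.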

Real-Time Adaptability

The MCP integration enables the system to adapt to new capabilities without code changes:

```typescript
// New tools are discovered automatically
const newTools = await mcpClient.refreshTools();
// System immediately gains new capabilities
// No redeployment required
```
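When tools are refreshed, it can be useful to know what actually changed, for example to log newly available capabilities. Here is a minimal sketch that compares two tool lists by name; `diffTools` and the `get_company_overview` tool name are hypothetical. The MCP specification also defines a `notifications/tools/list_changed` notification that a server may send, which a client could use as the trigger for such a refresh.

```typescript
interface ToolSummary {
  name: string;
  description?: string;
}

// Compares two tool lists by name and reports additions and removals.
function diffTools(
  previous: ToolSummary[],
  current: ToolSummary[]
): { added: string[]; removed: string[] } {
  const before = new Set(previous.map((tool) => tool.name));
  const after = new Set(current.map((tool) => tool.name));
  return {
    added: current.filter((tool) => !before.has(tool.name)).map((tool) => tool.name),
    removed: previous.filter((tool) => !after.has(tool.name)).map((tool) => tool.name),
  };
}

const knownTools: ToolSummary[] = [{ name: "get_stock_quote" }];
const refreshedTools: ToolSummary[] = [
  { name: "get_stock_quote" },
  { name: "get_company_overview" }, // hypothetical newly published tool
];

const toolChanges = diffTools(knownTools, refreshedTools);
console.log(toolChanges);
```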

Voice-Enabled Interactions

The application supports voice input and spoken responses through the browser's Web Speech API:

```typescript
const voiceHandler = {
  startListening: () => {
    const recognition = new webkitSpeechRecognition();
    recognition.onresult = (event) => {
      const transcript = event.results[0][0].transcript;
      processUserQuery(transcript);
    };
    recognition.start(); // begin capturing audio; without this, onresult never fires
  },

  speakResponse: (text: string) => {
    const utterance = new SpeechSynthesisUtterance(text);
    speechSynthesis.speak(utterance);
  }
};
```
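Speech recognition is not available in every browser, and some expose it only under the `webkit` prefix, so a feature check before constructing the recognizer avoids a runtime error. The sketch below is an illustration rather than part of the project: `findSpeechRecognition` is a hypothetical helper that takes the global object as a parameter so the logic can be exercised outside a browser.

```typescript
type RecognitionCtor = new () => unknown;

// `scope` is whatever object holds the globals; in a browser that is `window`.
// Prefers the standard name and falls back to the prefixed one.
function findSpeechRecognition(
  scope: Record<string, unknown>
): RecognitionCtor | undefined {
  const candidate = scope["SpeechRecognition"] ?? scope["webkitSpeechRecognition"];
  return typeof candidate === "function"
    ? (candidate as RecognitionCtor)
    : undefined;
}

// Simulated globals for illustration only.
class FakeRecognition {}
const prefixedBrowser = { webkitSpeechRecognition: FakeRecognition };
const unsupportedBrowser = {};

const supported = findSpeechRecognition(prefixedBrowser) !== undefined;
const missing = findSpeechRecognition(unsupportedBrowser) === undefined;
console.log(supported, missing);
```

In the browser, the call would look like `findSpeechRecognition(window as unknown as Record<string, unknown>)`, and the UI can hide the microphone button when the result is `undefined`.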

System Architecture Overview

```mermaid
graph TB
    subgraph "Frontend Layer"
        UI[React UI]
        Voice[Voice Interface]
        Storage[LocalStorage]
    end

    subgraph "API Layer"
        Route[Next.js API Route]
        Auth[Authentication]
        Cache[Response Cache]
    end

    subgraph "AI Layer"
        GPT[OpenAI GPT-4]
        MCP[MCP Client]
        Tools[Tool Manager]
    end

    subgraph "Data Layer"
        Alpha[AlphaVantage MCP]
        Market[Market Data]
        Real[Real-time APIs]
    end

    UI --> Route
    Voice --> Route
    Route --> GPT
    Route --> MCP
    MCP --> Tools
    Tools --> Alpha
    Alpha --> Market
    Alpha --> Real

    Storage -.-> UI
    Cache -.-> Route
```

Performance & Scalability Considerations

Optimized MCP Communication

The system implements several performance optimizations:

```typescript
// Connection pooling for MCP requests
const mcpPool = new ConnectionPool({
  maxConnections: 10,
  keepAlive: true,
  timeout: 5000
});

// Intelligent caching for tool discovery
const toolCache = new Map();
const getCachedTools = async () => {
  if (!toolCache.has('tools') || isExpired(toolCache.get('tools'))) {
    toolCache.set('tools', await mcpClient.listTools());
  }
  return toolCache.get('tools');
};
```
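The `isExpired` helper is not shown above. One way to make expiry explicit is to store a timestamp next to the cached value and compare it against a time-to-live. The following is a sketch under that assumption; `TtlCache` and the 60-second TTL are illustrative choices, and the clock is injectable so the behaviour can be checked without waiting.

```typescript
// Single-slot cache whose value expires after `ttlMs` milliseconds.
class TtlCache<T> {
  private entry: { value: T; storedAt: number } | undefined;
  private readonly ttlMs: number;
  private readonly now: () => number;

  constructor(ttlMs: number, now: () => number = () => Date.now()) {
    this.ttlMs = ttlMs;
    this.now = now;
  }

  // Returns the cached value, or undefined if nothing is stored or it expired.
  get(): T | undefined {
    if (this.entry === undefined) return undefined;
    const age = this.now() - this.entry.storedAt;
    return age < this.ttlMs ? this.entry.value : undefined;
  }

  set(value: T): void {
    this.entry = { value, storedAt: this.now() };
  }
}

// Demonstration with a fake clock instead of real time.
let fakeNow = 0;
const toolListCache = new TtlCache<string[]>(60000, () => fakeNow);

toolListCache.set(["get_stock_quote"]);
fakeNow = 30000;
const freshTools = toolListCache.get(); // still within the TTL
fakeNow = 60000;
const staleTools = toolListCache.get(); // TTL reached, so undefined

console.log(freshTools, staleTools);
```

A caller would fall back to `mcpClient.listTools()` whenever `get()` returns `undefined` and then store the result with `set()`.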

Conversation State Management

Efficient state management ensures a smooth user experience:

```typescript
// Sliding window context to manage memory
const manageContext = (messages: Message[]) => {
  const MAX_CONTEXT = 10;
  return messages.slice(-MAX_CONTEXT);
};

// LocalStorage persistence for session continuity
const persistConversation = (messages: Message[]) => {
  localStorage.setItem('chatHistory', JSON.stringify(messages));
};
```
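Two details are worth handling around this pattern: a plain `slice` can drop a system prompt that sits at the start of the history, and stored JSON can be missing or corrupt when it is read back. The sketch below shows one possible way to cover both; `trimContext`, `parseHistory`, and the `Turn` type are hypothetical names, not the project's own.

```typescript
interface Turn {
  role: "system" | "user" | "assistant";
  content: string;
}

// Keeps every system message plus the most recent `maxTurns` other messages.
function trimContext(messages: Turn[], maxTurns: number): Turn[] {
  const system = messages.filter((m) => m.role === "system");
  const rest = messages.filter((m) => m.role !== "system");
  // slice(-0) would return the whole array, so handle zero explicitly.
  const recent = maxTurns > 0 ? rest.slice(-maxTurns) : [];
  return [...system, ...recent];
}

// Parses stored history, returning an empty list for missing or invalid data.
function parseHistory(raw: string | null): Turn[] {
  if (raw === null) return [];
  try {
    const parsed: unknown = JSON.parse(raw);
    return Array.isArray(parsed) ? (parsed as Turn[]) : [];
  } catch {
    return [];
  }
}

const longChat: Turn[] = [
  { role: "system", content: "You are a stock assistant." },
  { role: "user", content: "AAPL?" },
  { role: "assistant", content: "Here is the AAPL quote." },
  { role: "user", content: "And MSFT?" },
];

const trimmed = trimContext(longChat, 2);
console.log(trimmed.map((m) => m.role).join(", "));
// prints system, assistant, user
```

In the browser, `parseHistory(localStorage.getItem('chatHistory'))` would restore a session without throwing on bad data.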

Production Deployment & Monitoring

Deployment Architecture

```mermaid
graph TB
    subgraph "Production Environment"
        LB[Load Balancer]

        subgraph "Application Tier"
            App1[Next.js Instance 1]
            App2[Next.js Instance 2]
            App3[Next.js Instance N]
        end

        subgraph "External Services"
            OpenAI[OpenAI API]
            MCP_Server[AlphaVantage MCP]
        end

        subgraph "Monitoring"
            Logs[Application Logs]
            Metrics[Performance Metrics]
            Health[Health Checks]
        end
    end

    LB --> App1
    LB --> App2
    LB --> App3

    App1 --> OpenAI
    App1 --> MCP_Server
    App2 --> OpenAI
    App2 --> MCP_Server
    App3 --> OpenAI
    App3 --> MCP_Server

    App1 --> Logs
    App2 --> Metrics
    App3 --> Health
```

The application is designed for cloud-native deployment:

```dockerfile
FROM node:20-alpine
WORKDIR /app
COPY package*.json ./
# Install all dependencies: the build step below typically needs devDependencies (e.g. TypeScript)
RUN npm ci
COPY . .
RUN npm run build
EXPOSE 3000
CMD ["npm", "start"]
```

Error Handling & Resilience

Robust error handling ensures system reliability:

```typescript
// Resolve after the given number of milliseconds
const delay = (ms: number) => new Promise((resolve) => setTimeout(resolve, ms));

const resilientMCPCall = async (toolName: string, args: any) => {
  const maxRetries = 3;
  let attempt = 0;

  while (attempt < maxRetries) {
    try {
      return await mcpClient.callTool(toolName, args);
    } catch (error) {
      attempt++;
      if (attempt === maxRetries) {
        return { error: 'Service temporarily unavailable' };
      }
      await delay(1000 * 2 ** (attempt - 1)); // Exponential backoff: 1s, then 2s
    }
  }
};
```
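A common refinement of a retry loop like the one above is to cap the delay and add random jitter, so that many clients retrying at once do not hit the server in lockstep. The helper below is a sketch of that idea, not the project's code; `backoffDelay`, the 1-second base, and the 30-second cap are assumed values, and the random source is injectable to keep the function testable.

```typescript
// Computes the wait before retry number `attempt` (zero-based).
// The delay doubles with each attempt, is capped at `capMs`, and is then
// scaled by a random factor in [0, 1) to spread retries out.
function backoffDelay(
  attempt: number,
  baseMs: number = 1000,
  capMs: number = 30000,
  random: () => number = Math.random
): number {
  const exponential = Math.min(capMs, baseMs * 2 ** attempt);
  return Math.floor(random() * exponential);
}

// With a fixed "random" value of 0.5 the schedule is deterministic.
const fixedRandom = () => 0.5;
const schedule = [0, 1, 2, 3, 10].map((attempt) =>
  backoffDelay(attempt, 1000, 30000, fixedRandom)
);
console.log(schedule);
// prints [ 500, 1000, 2000, 4000, 15000 ]
```

The last entry shows the cap at work: without it, the tenth retry would wait on the order of minutes.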

Key Technical Achievements

1. Seamless MCP Integration

- Dynamic tool discovery and execution

- Automatic OpenAI function format conversion

- JSON-RPC 2.0 protocol implementation

2. Advanced Conversation Management

- Contextual query understanding

- Multi-turn conversation state

- Intelligent history management

3. Production-Ready Architecture

- TypeScript for type safety

- Comprehensive error handling

- Performance optimizations

- Scalable deployment patterns

4. Modern User Experience

- Real-time voice interactions

- Responsive design with dark mode

- Smooth animations and transitions

- Cross-platform compatibility

The Future of Agentic AI Applications

This project demonstrates the transformative potential of MCP in building truly intelligent AI applications. By standardizing how AI systems discover and interact with external tools, MCP enables:

- Composable AI architectures where capabilities can be mixed and matched

- Dynamic system evolution without requiring code changes

- Standardized integration patterns across different AI providers and data sources

What's Next?

The foundation laid here opens doors to exciting possibilities:

- Multi-modal interactions combining text, voice, and visual data

- Collaborative AI agents that can work together on complex tasks

- Self-improving systems that learn and adapt their tool usage over time

Technical Skills Demonstrated

Through this project, I've showcased expertise in:

AI & Machine Learning:

- OpenAI GPT-4 integration and optimization

- Function calling and tool use patterns

- Conversation state management

- Natural language processing

Modern Web Development:

- Next.js 16 with React 19

- TypeScript for type-safe development

- TailwindCSS for responsive design

- Progressive Web App features

Protocol & Integration:

- Model Context Protocol implementation

- JSON-RPC 2.0 communication

- RESTful API design

- Real-time data integration

DevOps & Production:

- Docker containerization

- Environment configuration

- Performance optimization

- Error handling and monitoring

Conclusion

Building this MCP-powered stock market chatbot has been a journey into the future of AI application development. By leveraging the Model Context Protocol, I've created not just another chatbot, but a glimpse into how AI systems will evolve: dynamic, contextual, and truly intelligent.

The combination of MCP's standardized tool integration, OpenAI's advanced language models, and modern web technologies creates a powerful foundation for the next generation of AI applications. This project proves that with the right architecture and protocols, we can build AI systems that are not just responsive, but genuinely agentic—capable of reasoning, adapting, and growing more capable over time.

Ready to explore the future of AI development? The complete source code and deployment instructions are available in the project repository. Let's build the next generation of intelligent applications together.

Connect & Collaborate

Interested in discussing MCP integration, agentic AI development, or modern web architectures? I'm always excited to connect with fellow developers and explore new possibilities in AI application development.

Technologies Featured: Next.js • TypeScript • OpenAI GPT-4 • Model Context Protocol • AlphaVantage API • TailwindCSS • React 19